Probably the longest title I’ve ever had for a post!

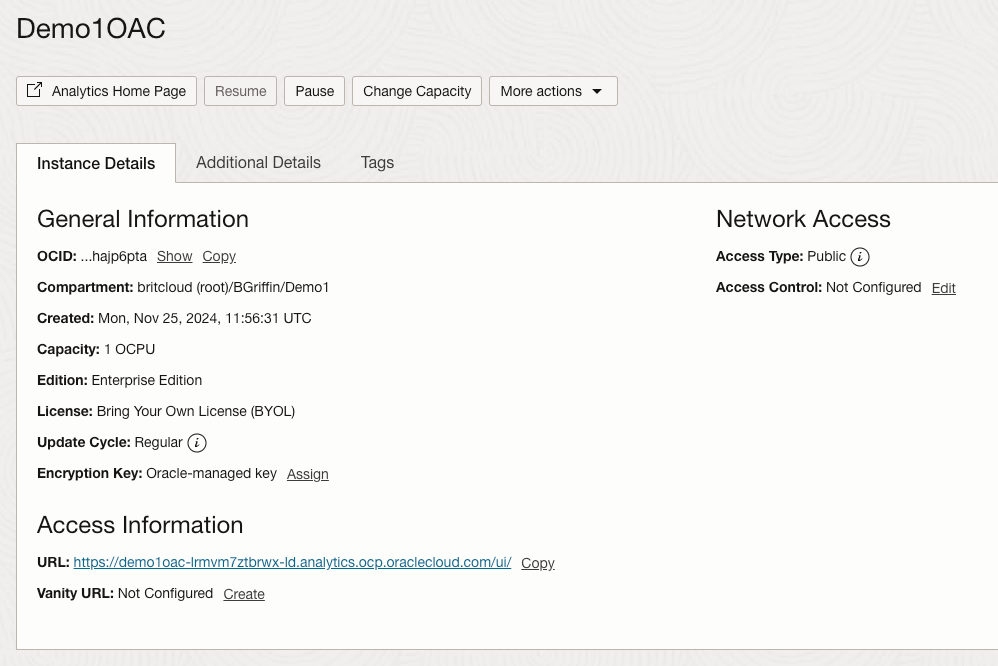

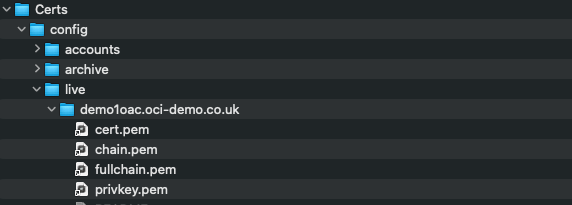

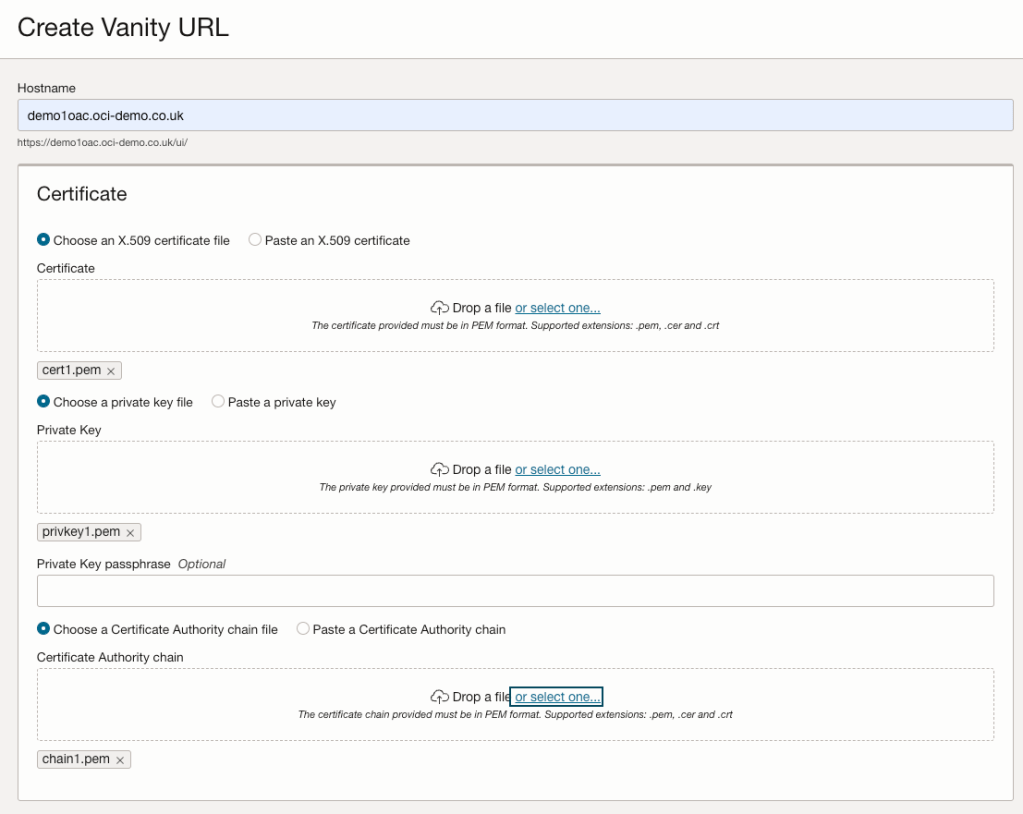

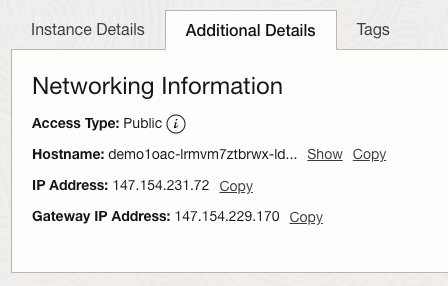

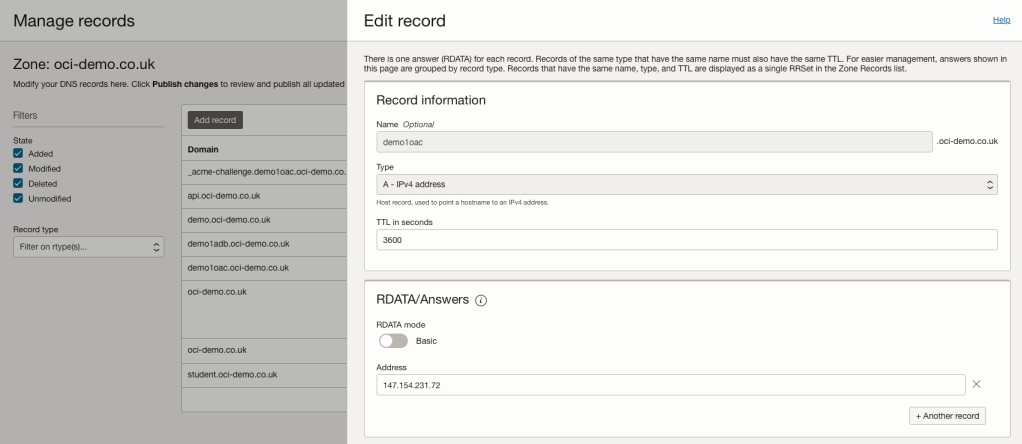

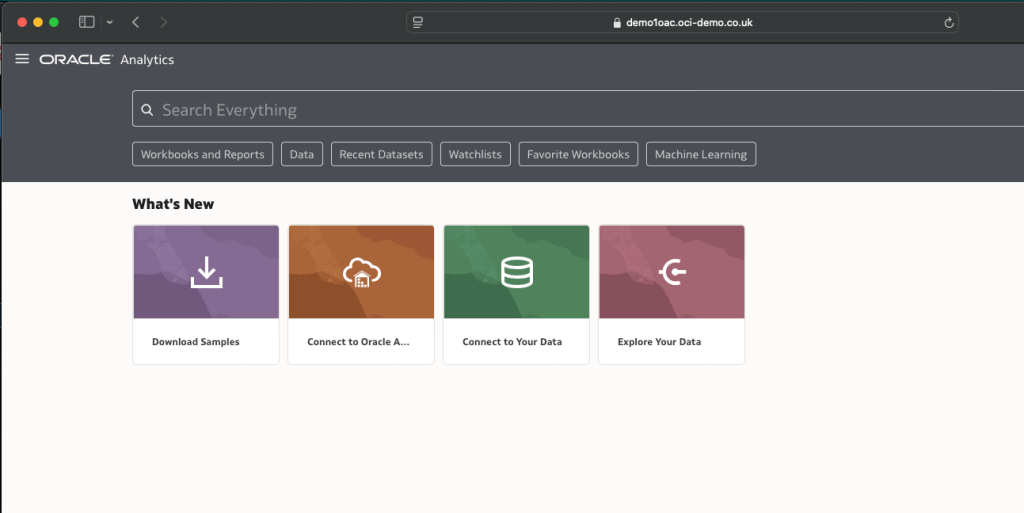

I have an Oracle Autonomous Database that I created a private endpoint for and published via a public load balancer in OCI. My reason for this complexity: I wanted to use a custom vanity URL to access the database, and this is the supported way to do it. If you want to know more about setting this up, be sure to check out this step by step guide 📖.

Once I’d got this set up, everything worked as expected apart from one small issue: when trying to get a token via REST so that I could call an Oracle Machine Learning model within the database, I received the following error ❌.

b'{"error_message":"\'DEMO1USER\' unauthorized to \'use OML application\'","errorCode":0,"request_id":"OMLIDMREST-955f999622584d33a70"}'

I was calling the REST API via Python, but other methods such as curl returned the same error (further details on calling the REST API to get a token and authenticate can be found here). The user had the relevant permissions, so it was definitely something else 🤔.

The trick to fixing this is to change the URL that is called to obtain the token. The standard URL looks like this:

https://oml-cloud-service-location-url/omlusers/api/oauth2/v1/token

The URL needs to be updated to include the OCID of the OCI tenancy and the name of the database to connect to.

For example, I was originally using this URL:

https://adb.brendg.co.uk/omlusers/api/oauth2/v1/token

I had to update this to:

https://adb.brendg.co.uk/omlusers/tenants/ocid1.tenancy.oc1..aaaaaabbjdjwnd3krfpjw23erghw4dxnvadd9w6j2hwcirea22qrtfam24mq/databases/DemoDB/api/oauth2/v1/token

The reason for this is that when you use a custom (vanity) URL to access the REST endpoint, the service doesn’t know which tenancy and database you are trying to obtain an authentication token for, so you need to specify both in the URL.
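To make the URL change concrete, here is a minimal Python sketch of how the tenancy- and database-qualified token URL could be built and called. The function names `build_token_url` and `request_token` are my own for illustration. The JSON request body (`grant_type`, `username`, `password`) and the `accessToken` response field are assumptions based on the usual OML token request rather than anything shown above, so check them against the documentation for your environment.

```python
import json
import urllib.request
from urllib.parse import quote


def build_token_url(host: str, tenancy_ocid: str, database_name: str) -> str:
    """Build the token URL that includes the tenancy OCID and database name.

    Hypothetical helper: it simply follows the URL pattern from the example above.
    """
    return (
        f"https://{host}/omlusers/tenants/{quote(tenancy_ocid, safe='')}"
        f"/databases/{quote(database_name, safe='')}/api/oauth2/v1/token"
    )


def request_token(url: str, username: str, password: str) -> str:
    """POST the credentials to the token URL and return the access token.

    Assumption: the endpoint accepts a JSON body with grant_type "password"
    and returns JSON containing an "accessToken" field.
    """
    payload = json.dumps(
        {"grant_type": "password", "username": username, "password": password}
    ).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["accessToken"]


if __name__ == "__main__":
    # Placeholder values: substitute your own vanity host, tenancy OCID and database name.
    print(build_token_url("adb.example.com", "ocid1.tenancy.oc1..example", "DemoDB"))
```

Only the URL builder runs here. To get a token you would pass its result to `request_token` along with the database user's credentials.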

Once I’d done this, it worked like magic 🪄