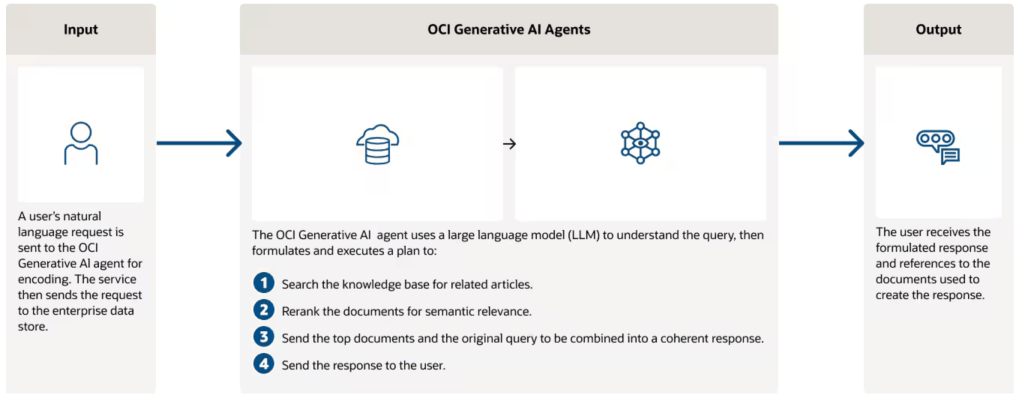

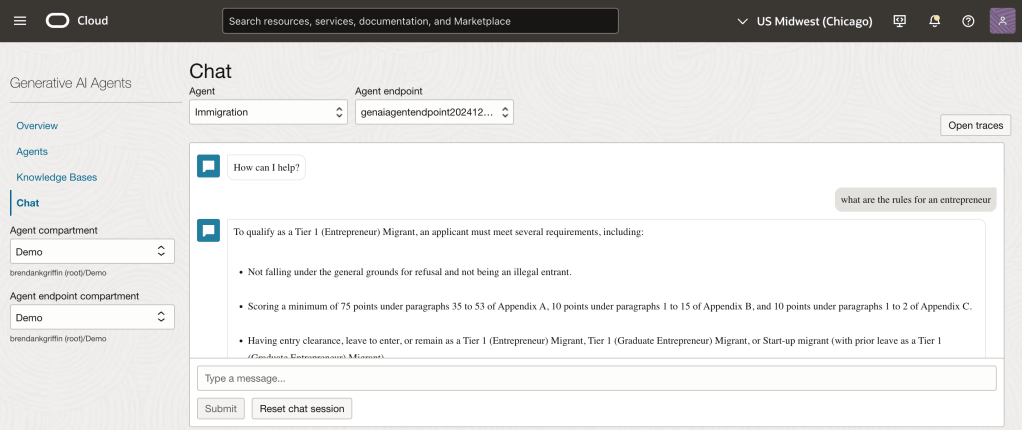

The Generative AI Agent service in OCI recently added the ability to add a SQL Tool, this enables an agent to generate a SQL query and optionally run the query against a database and return the results of the query to the agent 🤖. I created a short video that steps through how to use a SQL Tool ⚒️ with an agent, which can be found here 📼.

More recently (mid-July 2025) the SQL Tool has been further enhanced so that responses include the following:

- The raw output of the SQL query

- A conversational “LLM style” response

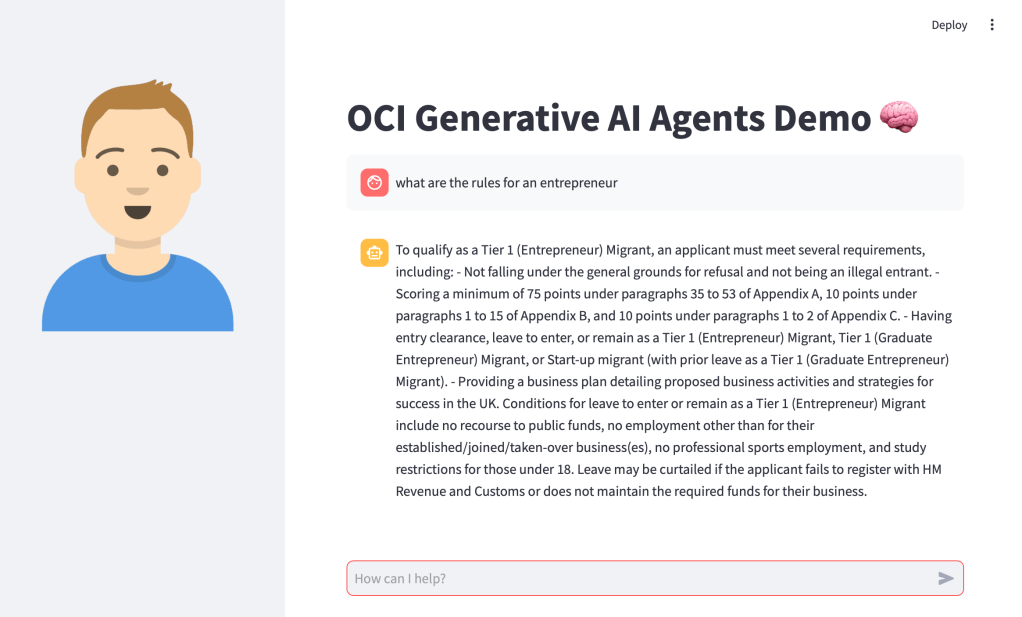

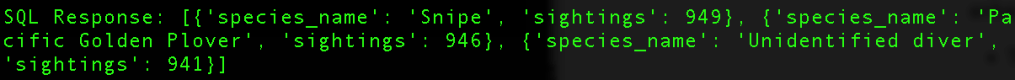

Previously a SQL Tool would only return the raw output of the SQL query, I found this quite useful as I could use Python packages such as matplotlib to visualise results, as of mid-July responses from the agent also include an LLM style conversational response, for example (taken from my agent that queries a database of bird sightings 🦅):

Raw Output of SQL Query

Conversational LLM Style Response

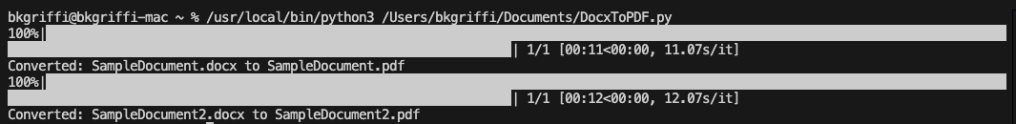

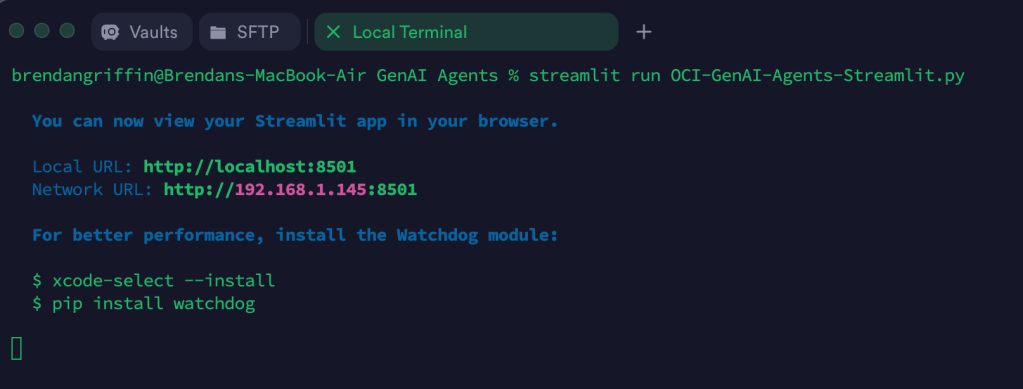

I’ve put together a short Python script that demonstrates how to get access to this data from a response, I typically use Streamlit as a front-end for the demo agents that I build, however to keep things simple, we’ll use the good old “shell” for this demo!

Here is the script –

import oci

textinput = "what were the 3 most popular birds in 1997"

config = oci.config.from_file(profile_name="DEFAULT")

service_ep = "https://agent-runtime.generativeai.uk-london-1.oci.oraclecloud.com"

agent_ep_id = "ocid1.genaiagentendpoint.oc1.uk-london-1.xwywwkz7bn5f5aogazpvkijnoj2u75yadsq"

generative_ai_agent_runtime_client = oci.generative_ai_agent_runtime.GenerativeAiAgentRuntimeClient(config,service_endpoint=service_ep)

create_session_response = generative_ai_agent_runtime_client.create_session(

create_session_details=oci.generative_ai_agent_runtime.models.CreateSessionDetails(

display_name="Session",

description="Session"),

agent_endpoint_id=agent_ep_id)

sess_id = create_session_response.data.id

response = generative_ai_agent_runtime_client.chat(

agent_endpoint_id=agent_ep_id,

chat_details=oci.generative_ai_agent_runtime.models.ChatDetails(

user_message=textinput,

session_id=sess_id))

output = response.data.traces[3].output

output = eval(output)

sql_response = output["result"]

print("")

print("SQL Response: " + str(sql_response))

text_response = response.data.message.content.text

print("")

print("Text Response: " + str(text_response))

To use this script you’ll need to update the following:

- textinput – update this to reflect the question to ask your agent, unless your agent is knowledgeable on bird sightings 🤣, this will need to update this

- config – update this to reflect the correct profile to use to authenticate with OCI as per: https://docs.oracle.com/en-us/iaas/tools/python/2.156.0/configuration.html

- service_ep – this is the service endpoint, of which there is a different endpoint for each OCI region – if your agent resides in the UK South region, you don’t need to change this 😀

- agent_ep_id – this is OCID of the endpoint for the specific agent, which can be obtained using the following instructions: https://docs.oracle.com/en-us/iaas/Content/generative-ai-agents/get-endpoint.htm

Finally make sure you have the latest version of the OCI SDK for Python, to upgrade to the latest version run the following command –

pip3 install oci --upgradeWhen run the output should look something like this:

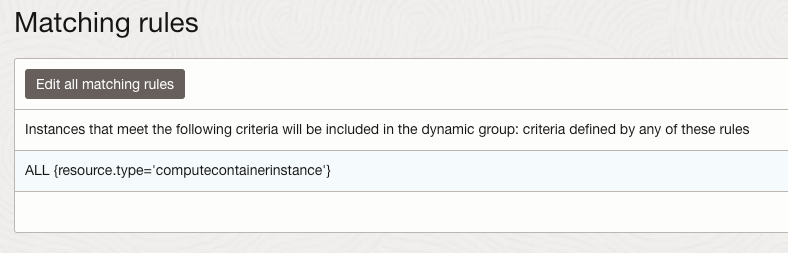

Here is an example of how I’ve used matplotlib (within a Streamlit front-end) to visualise results using the raw output of the SQL query.

As you can see below, it returns the conversational response, I then take the raw SQL output and use matplotlib to make it look pretty 💄 – I may put together a post on this too.

Thanks for reading!