Next up in my personal backlog (yes, I am that sad) was to play around with the document summarization capabilities included within Azure Cognitive Services for Language.

But what is this, you may ask?

Document summarization uses natural language processing techniques to generate a summary for documents. Extractive summarization extracts sentences that collectively represent the most important or relevant information within the original content. These features are designed to shorten content that could be considered too long to read – taken from here.
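To make "extractive" a little more concrete, here is a toy sketch in Python. It is **not** how the Azure service works (its model isn't described here). Instead, it swaps in a simple word-frequency heuristic: score each sentence by the average frequency of its non-stopword words across the whole document, keep the top N sentences, and return them in their original order. The function names and the tiny stopword list are hypothetical, chosen purely for illustration.

```python
import re
from collections import Counter
from typing import List

# A tiny, hypothetical stopword list; a real implementation would use a proper one
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "was",
             "it", "its", "for", "on", "at", "with", "as", "are"}


def split_sentences(text: str) -> List[str]:
    # Naive split: a sentence ends with ., ! or ? followed by whitespace
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def words(sentence: str) -> List[str]:
    # Lower-cased word tokens, with stopwords removed
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, sentence_count: int) -> List[str]:
    sentences = split_sentences(text)
    # How often each (non-stopword) word appears across the whole document
    freq = Counter(w for s in sentences for w in words(s))

    def score(sentence: str) -> float:
        tokens = words(sentence)
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    # Pick the highest-scoring sentences, then restore their original order
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:sentence_count])
    return [sentences[i] for i in keep]
```

The key property of extractive summarization is visible here: the output is made only of sentences copied verbatim from the input, never rewritten ones.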

I had a quick play around with document summarization (using this code sample for inspiration) and put together the Python script below (also available here), which does the following:

- Takes a string of text and determines how many sentences it contains.
- Passes the text to the document summarization endpoint, requesting a summary that includes no more than half the number of sentences in the original string.
  - For example, if 6 sentences are passed to the endpoint for summarization, the summary should include no more than 3 sentences.
- Prints the summarized output.
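Counting sentences with `text.split(".")` is a bit fragile: it also counts the empty string after the final full stop, and it splits numbers such as "2.5". Here is a slightly more robust sketch of the first step and the "no more than half" rule, using a regular expression that treats `.`, `!` or `?` followed by whitespace (or the end of the text) as a sentence boundary. The helper names `count_sentences` and `summary_length` are hypothetical, and this is still naive: abbreviations such as "e.g." would be miscounted.

```python
import re


def count_sentences(text: str) -> int:
    # A sentence boundary is one or more of . ! ? followed by whitespace or the end of the text
    parts = re.split(r"[.!?]+(?:\s+|$)", text.strip())
    return len([p for p in parts if p.strip()])


def summary_length(text: str) -> int:
    # No more than half the sentences (rounded down), but always at least one,
    # and always a whole number, since the API's sentenceCount expects one
    return max(1, count_sentences(text) // 2)
```

For example, `summary_length("One. Two. Three. Four. Five. Six.")` asks for 3 sentences, while "Version 2.5 is out. Great!" is correctly counted as 2 sentences rather than 3.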

```python
import time

import requests

# Text to be summarized
text = """The Sega Mega Drive, also known as the Sega Genesis in North America, was a popular video game console that was first released in Japan in 1988.
It was Sega's third home console and was designed to compete with Nintendo's popular NES and SNES consoles.
The Mega Drive was released in North America in 1989 and quickly gained a strong following among gamers thanks to its impressive graphics, sound quality, and large library of games.
Some of the most popular games for the console include Sonic the Hedgehog, Streets of Rage, and Phantasy Star.
The Mega Drive remained in production until 1997 and sold over 40 million units worldwide, cementing its place as one of the most beloved video game consoles of all time."""

# Calculate how many sentences there are to be summarized (ignoring the empty string left
# after the final full stop) and halve this, e.g. 6 sentences gives a summary of no more than 3.
# Integer division keeps sentenceCount a whole number; max() ensures we ask for at least 1.
sentences = max(1, len([s for s in text.split(".") if s.strip()]) // 2)

url = "https://ENDPOINT.cognitiveservices.azure.com/language/analyze-text/jobs?api-version=2022-10-01-preview"  # Replace ENDPOINT with the relevant endpoint
key = "KEY"  # Key for Azure Cognitive Services
headers = {"Ocp-Apim-Subscription-Key": key}

payload = {
    "displayName": "Summarizer",
    "analysisInput": {
        "documents": [
            {
                "id": "1",
                "language": "en",
                "text": text,
            }
        ]
    },
    "tasks": [
        {
            "kind": "ExtractiveSummarization",
            "taskName": "Summarizer",
            "parameters": {
                "sentenceCount": sentences,
            },
        }
    ],
}

# Submit the job (json= serializes the payload and sets the Content-Type header);
# the URL to fetch the results from comes back in the "operation-location" header
r = requests.post(url, headers=headers, json=payload)
r.raise_for_status()
results = r.headers["operation-location"]

time.sleep(10)  # Being super lazy here and putting in a sleep, rather than polling for the results to see when they are available!

r = requests.get(results, headers=headers)

# Print each sentence selected for the summary
for s in r.json()["tasks"]["items"][0]["results"]["documents"][0]["sentences"]:
    print(s["text"])
```
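If you'd rather not rely on a fixed `time.sleep(10)`, here is a minimal polling sketch. It assumes the job response has a top-level `"status"` field, and that `"notStarted"`, `"running"` and `"cancelling"` mean "not finished yet"; those values are my assumption, so check them against the API reference. `poll_job` is a hypothetical helper that takes any function returning the parsed JSON body, which keeps it independent of `requests` (and easy to test).

```python
import time
from typing import Any, Callable, Dict

# Job statuses treated as "still in progress" (an assumption; check the API reference)
IN_PROGRESS = {"notStarted", "running", "cancelling"}


def poll_job(fetch: Callable[[], Dict[str, Any]],
             interval: float = 1.0,
             timeout: float = 60.0) -> Dict[str, Any]:
    """Call fetch() until the job leaves an in-progress state, or raise TimeoutError."""
    deadline = time.monotonic() + timeout
    while True:
        body = fetch()
        if body.get("status") not in IN_PROGRESS:
            return body  # finished (successfully or not); the caller inspects the status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Job still {body.get('status')!r} after {timeout} seconds")
        time.sleep(interval)
```

In the script above, the sleep and the second request could then become `body = poll_job(lambda: requests.get(results, headers=headers).json())`, followed by a check that `body["status"]` reports success before reading `body["tasks"]`.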

I used ChatGPT to generate the text I used for testing (on one of my favourite subjects, I may add!):

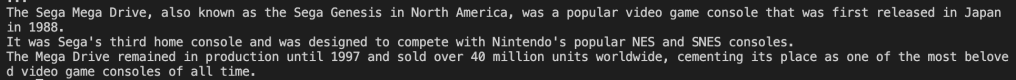

Here is the summary that it provided – it didn’t do too bad a job, did it?

I may start using this to summarize some of the super-long work e-mails I receive 😎.
