This is probably the strangest title that I’ve ever given a post!

I never seem to know when my bins (garbage cans, for any Americans reading this) will be collected. I have three separate bins, all on slightly different collection cycles, so rather than manually checking my local council's website, I thought I'd write a script to scrape this data and save myself a few keystrokes and valuable seconds ⏲️. In all honesty, this was just an excuse to spend some quality time with Python 🐍.

Fortunately for me, my local council's website requires no login, and the page that returns the collection schedule for an address is static (its URL doesn't appear to change).

If I head over to https://www.hull.gov.uk/bins-and-recycling/bin-collections/bin-collection-day-checker, enter my postcode and select my house number, it returns a page with my collection schedule at the following URL: https://www.hull.gov.uk/bins-and-recycling/bin-collections/bin-collection-day-checker/checker/view/10093952819.
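Since the URL doesn't change, the trailing number appears to be the only part that identifies the address. A minimal sketch, assuming that is the case, of building the URL from that number so it can be stored on its own; `CHECKER_BASE` and `checker_url` are hypothetical names, not part of the original script:

```python
# Base of the council's checker URL, copied from the address above.
# Assumption: only the trailing number varies between addresses.
CHECKER_BASE = (
    "https://www.hull.gov.uk/bins-and-recycling/bin-collections/"
    "bin-collection-day-checker/checker/view/"
)


def checker_url(address_id: str) -> str:
    """Build the checker URL for an address ID (hypothetical helper)."""
    if not address_id.isdigit():
        raise ValueError(f"expected a numeric address ID, got {address_id!r}")
    return CHECKER_BASE + address_id


print(checker_url("10093952819"))
```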

What I then needed to do was figure out how to pull the data from the returned page so that I could output it from a Python script. It turned out that Beautiful Soup (a Python library for pulling data out of HTML and XML files) could do this.

Before I could use Beautiful Soup to do the parsing, I needed to grab the page itself. To do this, I used the Requests library.

First, I needed to install these libraries by running “pip install requests” and then “pip install beautifulsoup4” from the command line.

I then used the Requests library to request the page and create a response object (“r”) that would hold the contents of the page.

```python
import requests

# Download the collection schedule page for my address
r = requests.get("https://www.hull.gov.uk/bins-and-recycling/bin-collections/bin-collection-day-checker/checker/view/10093952819")
```
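The one-line request above sets no timeout, so Requests will wait indefinitely if the site stops responding, and it doesn't check the status code, so an error page would be parsed as if it were the schedule. A sketch of a slightly more defensive fetch using the real `timeout` argument and `Response.raise_for_status()` from Requests; the `fetch_page` helper and its 10-second default are my own assumptions, not part of the original script:

```python
from typing import Optional

import requests


def fetch_page(url: str, session: Optional[requests.Session] = None,
               timeout: float = 10.0) -> str:
    """Download a page and return its HTML, raising on HTTP errors.

    Hypothetical helper: passing a Session lets repeated calls reuse one
    connection; without one, plain requests.get() is used. The 10-second
    timeout is an arbitrary choice.
    """
    get = session.get if session is not None else requests.get
    response = get(url, timeout=timeout)
    # Raises requests.HTTPError for 4xx/5xx responses
    response.raise_for_status()
    return response.text
```

The returned string can then be handed to `bs4.BeautifulSoup(html, features="html.parser")` exactly as `r.text` is in the next step.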

Once I had the page, I could use Beautiful Soup to analyze it. I began by importing the module and then creating a new Beautiful Soup object from the “r” object created by Requests (specifically r.text, which contains the raw HTML of the page).

```python
import bs4

# Parse the raw HTML returned by Requests
soup = bs4.BeautifulSoup(r.text, features="html.parser")
```
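Because `BeautifulSoup` only needs a string of HTML, one option while working out the parsing is to save a copy of the page once and parse that, rather than requesting it from the council's site on every run. A sketch; the `load_saved_page` helper and the `bin-page.html` file name are hypothetical:

```python
from pathlib import Path

import bs4


def load_saved_page(path: str) -> bs4.BeautifulSoup:
    """Parse a locally saved copy of the page (hypothetical helper)."""
    html = Path(path).read_text(encoding="utf-8")
    # Same parser as the live version above
    return bs4.BeautifulSoup(html, features="html.parser")


# Example, after saving r.text once (hypothetical file name):
# Path("bin-page.html").write_text(r.text, encoding="utf-8")
# soup = load_saved_page("bin-page.html")
```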

I then created a variable (dates) to hold the collection dates extracted from the page, which I print to the screen at the end of the script.

I also imported the os library, although none of the extraction code below actually relies on it.

```python
import os

# Accumulates one line per bin, printed at the end
dates = ""
```

This is where the fun began! I opened a web browser, navigated to the page and viewed the source (CTRL + U in Chrome/Edge on Windows), as I needed to figure out exactly where the data I needed resided within the page. After much scrolling, I found it!

I could see that the data for the black bin could be reached via the element with the class “region region-content”. I used the following to extract the data I needed (the collection date).

```python
# Use Beautiful Soup to find the element with the class "region region-content"
blackbin = soup.find(class_="region region-content")

# Find the parent div of this element (which contains the black bin data)
div = blackbin.find_parent('div')

# Find all the span tags within this div; the data is contained within a span tag
span = div.find_all('span')

# The black bin date is within the second span tag, so retrieve it (index 1) and split using the ">" delimiter
spantext = str(span[1]).split(">")

# Split index 1 using "<" as a delimiter to easily remove the other text we don't need; it's messy but it works!
date = spantext[1].split("<")

# Retrieve index 0, which is the full date
blackbindate = date[0]

# Add "blackbindate" to the "dates" variable, prefixed with the colour of the bin
dates += "Black Bin " + "- " + blackbindate + "," + "\n"
```
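The string splitting works, but Beautiful Soup can return a tag's text directly with `get_text()`. A sketch of the same navigation (find the class, step up to the parent `div`, take the second `span`) run against a small snippet; the HTML is an invented stand-in for the page's shape, not the council's actual markup, the date is made up, and `black_bin_date` is a hypothetical name:

```python
import bs4

# Invented HTML mimicking the structure the black bin code relies on
SAMPLE_HTML = """
<div class="page">
  <div class="region region-content">
    <span>Black bin collection</span>
    <span>Tuesday 6 May 2025</span>
  </div>
</div>
"""


def black_bin_date(soup: bs4.BeautifulSoup) -> str:
    """Return the text of the second span in the div above the content region."""
    region = soup.find(class_="region region-content")
    if region is None:
        raise ValueError("content region not found; has the page layout changed?")
    spans = region.find_parent("div").find_all("span")
    # get_text(strip=True) drops the tags and surrounding whitespace in one step
    return spans[1].get_text(strip=True)


soup = bs4.BeautifulSoup(SAMPLE_HTML, features="html.parser")
print(black_bin_date(soup))  # Tuesday 6 May 2025
```

On this snippet the original `split(">")`/`split("<")` approach yields the same string; `get_text()` just avoids depending on how the tag happens to be serialized.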

For the blue bin, I took a slightly different approach. I searched for the style attribute it was using, which was “color:blue;font-weight:800”.

```python
# Find all tags using the style color:blue;font-weight:800
blue = soup.find_all(style="color:blue;font-weight:800")

# Select the second tag returned, which contains the actual date, then split using the ">" delimiter
bluebin = str(blue[1]).split(">")

# Split the data further using the "<" delimiter to easily remove the other text we don't need; it's messy but it works!
bluebincollection = bluebin[1].split("<")

# Return index 0, which is the full date
bluebindate = bluebincollection[0]

# Add "bluebindate" to the dates variable, prefixed with the colour of the bin
dates += "Blue Bin " + "- " + bluebindate + "," + "\n"
```
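The same `get_text()` idea applies to the style-based search. Note that `find_all(style=...)` compares against the attribute's exact string, so a change as small as an added space in the council's markup would stop it matching. A sketch against another invented snippet (the markup and date are assumptions, not taken from the real page):

```python
import bs4

# Invented markup: a label and a date, both styled in bold blue
SAMPLE_HTML = """
<p><span style="color:blue;font-weight:800">Blue bin</span></p>
<p><span style="color:blue;font-weight:800">Friday 9 May 2025</span></p>
"""

soup = bs4.BeautifulSoup(SAMPLE_HTML, features="html.parser")

# find_all(style=...) matches tags whose style attribute equals this exact string
blue = soup.find_all(style="color:blue;font-weight:800")

# As in the original script, the second match holds the date
bluebindate = blue[1].get_text(strip=True)
print(bluebindate)  # Friday 9 May 2025
```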

For my brown bin, I used a slight variation of the approach I used for the blue bin, except this time I searched for the style attribute “color:#654321;font-weight:800”.

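```python
# Find all tags using the style color:#654321;font-weight:800 (brown)
brown = soup.find_all(style="color:#654321;font-weight:800")

# As with the blue bin, the second tag contains the date; strip the surrounding tag text
brownbin = str(brown[1]).split(">")
brownbincollection = brownbin[1].split("<")
brownbindate = brownbincollection[0]

# Add "brownbindate" to the dates variable, prefixed with the colour of the bin
dates += "Brown Bin " + "- " + brownbindate + "," + "\n"
```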
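Since the blue and brown bins differ only in the style string, the two blocks could be folded into one helper driven by a dictionary. A sketch, assuming (as the original code does) that the date is always the second tag with each style; `BIN_STYLES` and `styled_bin_dates` are hypothetical names, and the snippet and dates are invented:

```python
import bs4

# Style strings taken from the script above, keyed by bin colour
BIN_STYLES = {
    "Blue": "color:blue;font-weight:800",
    "Brown": "color:#654321;font-weight:800",
}


def styled_bin_dates(soup: bs4.BeautifulSoup) -> dict:
    """Map each bin colour to the text of the second tag using its style (hypothetical helper)."""
    dates = {}
    for colour, style in BIN_STYLES.items():
        tags = soup.find_all(style=style)
        if len(tags) < 2:
            raise ValueError(f"expected at least two {colour.lower()} tags, found {len(tags)}")
        dates[colour] = tags[1].get_text(strip=True)
    return dates


# Invented markup for illustration
SAMPLE_HTML = """
<span style="color:blue;font-weight:800">Blue bin</span>
<span style="color:blue;font-weight:800">Friday 9 May 2025</span>
<span style="color:#654321;font-weight:800">Brown bin</span>
<span style="color:#654321;font-weight:800">Monday 12 May 2025</span>
"""

soup = bs4.BeautifulSoup(SAMPLE_HTML, features="html.parser")
print(styled_bin_dates(soup))
```

Adding a fourth colour-coded bin would then mean adding one dictionary entry rather than another copy of the block.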

Finally, I printed the dates variable, which contains the dates extracted from the web page.

```python
print(dates)
```
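Building `dates` with repeated `+=` works fine for three bins; an alternative is to collect the lines and join them once. A sketch that reproduces the same "Colour Bin - date," format used above; `format_dates` is a hypothetical name and the dates are invented:

```python
def format_dates(bin_dates: dict) -> str:
    """Render {colour: date} as lines like 'Black Bin - <date>,' (hypothetical helper)."""
    lines = [f"{colour} Bin - {date}," for colour, date in bin_dates.items()]
    # The original script appends "\n" after every line, including the last one
    return "".join(line + "\n" for line in lines)


example = {
    "Black": "Tuesday 6 May 2025",
    "Blue": "Friday 9 May 2025",
    "Brown": "Monday 12 May 2025",
}
print(format_dates(example), end="")
```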

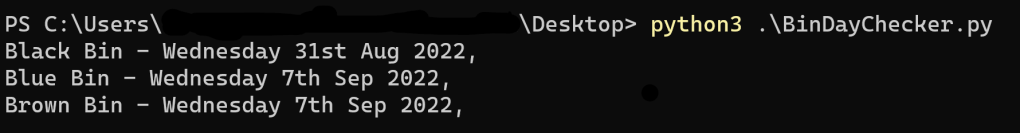

Here is the output in all its glory!

The script I wrote can be found on GitHub.
